MCP 101: An Introduction to the MCP Standard

A beginner's guide to every major concept in MCP according to an MCP developer.

Overview of MCP

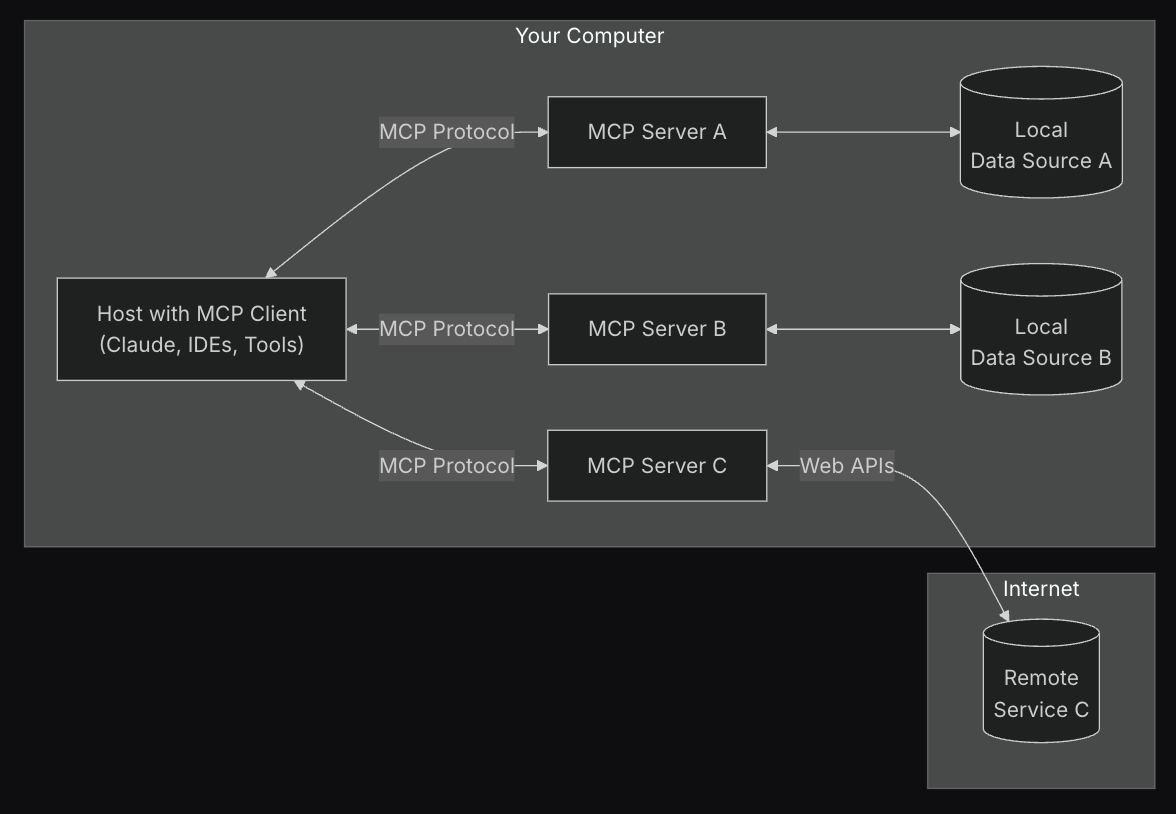

With recent developments in AI model stability and standards for agentic AI, it has become easier than ever to develop your own AI-native applications and software. In particular, MCP (Model Context Protocol) allows developers to build connectors to any digital system (via "servers") that AI models can access through user applications (aka "clients"). In this guide, we will cover every major aspect of MCP so you understand the protocol at its core. This is intended to be an informative overview, and future posts will go into more depth on the technical steps to build servers and clients.

Hosts, Clients and Servers

MCP has several components that play a part in the overall architecture:

Hosts are the applications that run one or more clients and can launch local servers (e.g., VS Code with MCP support would be a "host")

Clients are the connectors between AI models and servers; each client maintains a 1:1 connection with a single server, so a host runs one client per connected server

Servers are the processes, running either locally alongside a host or remotely (hence the name "server"), which expose the functionality of other software or services that you want your AI model to have access to

The MCP host application manages the models and the conversational interface, the clients manage communications, and the servers handle the specialized integrations with external systems. Altogether, the components create a system for AI agents to perform arbitrary functions like accessing databases, manipulating a CRM, or processing files on your local computer.

Transport Layers

Transports are the technical solution for how messages are exchanged between MCP clients and servers. The different transports can accommodate different deployment scenarios and technical requirements, although all use JSON-RPC 2.0 as the message format for consistency.
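To make the shared message format concrete, here is a minimal sketch of JSON-RPC 2.0 requests and responses as plain Python dictionaries. The helper names (`make_request`, `make_result`, `make_error`) are made up for illustration and are not part of any SDK; `tools/list` is the MCP method a client uses to ask a server for its tools.

```python
import json
from itertools import count

_ids = count(1)  # each pending request needs its own id


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request object (hypothetical helper)."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_result(request, result):
    """Build the success response; the id ties it back to the request."""
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


def make_error(request, code, message):
    """Build an error response using a JSON-RPC error object."""
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "error": {"code": code, "message": message},
    }


request = make_request("tools/list")
response = make_result(request, {"tools": []})
print(json.dumps(request))
print(json.dumps(response))
```

Whichever transport carries these messages, the envelope stays the same, which is what lets one server implementation sit behind several transports.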

Standard Input/Output (stdio)

Stdio is the simplest transport option, great for local processes and command-line tools. If your service only needs simple process communication, stdio provides a lightweight solution with minimal overhead. It's particularly useful for scripts and local integrations where you're spawning servers as child processes.
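As a rough illustration of how little machinery stdio needs, the sketch below frames each message as one line of JSON, which is how I understand the stdio transport to delimit messages. An in-memory `io.StringIO` stands in for the pipe to a child process, and the function names are assumptions made for this example.

```python
import io
import json


def write_message(stream, message):
    """Write one message per line; json.dumps escapes any embedded newlines."""
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream):
    """Yield one parsed message for every non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# io.StringIO stands in for the child process's stdin/stdout pipe.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})
pipe.seek(0)
received = list(read_messages(pipe))
```

In a real integration the host would spawn the server as a subprocess and attach these helpers to its stdin and stdout instead of an in-memory buffer.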

HTTP+SSE

This transport mode combines HTTP endpoints with Server-Sent Events (SSE) responses for multi-message server-to-client streaming. It is being deprecated in favor of the Streamable HTTP mode described below, so new servers and clients should prefer Streamable HTTP where possible. HTTP+SSE works well in web environments and restricted networks where full bidirectional protocols might be blocked. However, SSE implementations require careful security considerations beyond those of standard HTTP, such as validating the Origin header on incoming connections to guard against DNS rebinding.

Streamable HTTP

Streamable HTTP is the newest transport method. It keeps the streaming strengths of HTTP+SSE while supporting connections from multiple clients. It also builds session management into the transport, allowing servers to preserve context between calls within a single session or workflow.
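The session idea can be sketched without any web framework. The example below assumes the server hands out an identifier when a session starts and the client echoes it back in a header on later requests. `Mcp-Session-Id` is the header name as I understand the spec, while `SessionStore` and its methods are hypothetical.

```python
import uuid

SESSION_HEADER = "Mcp-Session-Id"  # header name as I understand the spec


class SessionStore:
    """In-memory, per-session context (hypothetical; not from any SDK)."""

    def __init__(self):
        self._sessions = {}

    def create(self):
        """Start a session and return the id the server sends to the client."""
        session_id = uuid.uuid4().hex
        self._sessions[session_id] = {}
        return session_id

    def get(self, headers):
        """Return the context for a request, or None if the session is unknown.

        Real HTTP headers are case-insensitive; this sketch uses a plain dict.
        """
        return self._sessions.get(headers.get(SESSION_HEADER))


store = SessionStore()
session_id = store.create()
# A later call in the same session can see what an earlier call stored.
store.get({SESSION_HEADER: session_id})["last_query"] = "SELECT 1"
```

A production server would also expire idle sessions and reject requests that carry an unknown identifier.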

Custom Transports

The protocol also supports custom transports for specialized needs, which can be optionally supported by clients and servers. This works best when you are developing an application in-house, since you can control the transport methods supported on both the client and server sides.

Features

Tools

Tools represent the action-oriented side of MCP. They are executable functions that AI models can invoke to interact with external systems, perform computations, and take real-world actions. Unlike resources, which provide context for the model to read (explained later on), tools are dynamic operations that can modify state and trigger workflows.

The power of tools lies in their model-controlled nature. The AI model can automatically decide when and how to use tools, with appropriate human oversight through approval mechanisms. This enables sophisticated agentic behaviors where the AI can reason about what actions to take and execute them through an MCP server.

Each MCP server exposes its tools as a list of definitions. Every definition includes a name, a description, a JSON Schema describing the parameters used to call the tool, and optional annotations about how the tool can be utilized. For example:

```json
{
  "name": "create_database_record",
  "description": "Create a new customer record in the CRM",
  "inputSchema": {
    "type": "object",
    "properties": {
      "customerName": { "type": "string" },
      "email": { "type": "string", "format": "email" },
      "companySize": { "type": "number", "minimum": 1 }
    },
    "required": ["customerName", "email"]
  },
  "annotations": {
    "title": "Create Customer Record",
    "destructiveHint": false,
    "idempotentHint": false
  }
}
```

Tool Annotations

Tool annotations provide crucial metadata about tool behavior without affecting what the AI model sees. These hints help client applications understand how to present tools to users and manage approval workflows:

readOnlyHint indicates that the tool does not modify its environment

destructiveHint warns about potentially irreversible operations

idempotentHint signals that repeated calls with the same arguments have no additional effect

openWorldHint indicates interaction with external entities
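One way a client might turn these hints into an approval workflow is sketched below. The policy and the `approval_level` function are hypothetical, and the fallback values for missing hints (not read-only, possibly destructive) are my reading of the spec's defaults. Because annotations are only hints supplied by the server, a cautious client would apply a policy like this only to servers it trusts.

```python
def approval_level(annotations):
    """Map tool annotations to a hypothetical client-side approval level."""
    annotations = annotations or {}
    if annotations.get("readOnlyHint", False):
        return "auto"  # declared read-only: run without prompting
    if annotations.get("destructiveHint", True):
        return "confirm-destructive"  # show a strong warning first
    return "confirm"  # writes data, but only additively


create_record = {
    "title": "Create Customer Record",
    "destructiveHint": False,
    "idempotentHint": False,
}
print(approval_level(create_record))
```

Treating a missing hint as the riskier case means a tool with no annotations at all still gets the strongest confirmation prompt.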

Implementation Best Practices

If you are a developer interested in building an MCP server, here are some recommendations to keep in mind:

Ensure parameters on the tools closely match the desired functionality

For long-running operations, implement progress reporting methods

Consider rate limiting for resource-intensive tools

Design atomic operations that either complete fully or fail cleanly

Error Handling

Error responses in tools should be informative so the AI model can adjust accordingly. When a tool encounters an error, return an error result with isError: true and descriptive content rather than throwing protocol-level exceptions. This allows the AI model to see what went wrong and potentially take corrective action or request human intervention.
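A minimal sketch of this pattern follows. The `call_tool` wrapper and the handler are hypothetical stand-ins for whatever your server framework provides; the part that matters is that failures come back as an ordinary result with `isError` set to true and a readable message in the content.

```python
def call_tool(handler, arguments):
    """Run a tool handler and report failures inside the result itself."""
    try:
        text = handler(**arguments)
    except Exception as exc:  # surface the failure to the model, not the protocol
        return {
            "content": [{"type": "text", "text": f"{type(exc).__name__}: {exc}"}],
            "isError": True,
        }
    return {"content": [{"type": "text", "text": text}], "isError": False}


def create_database_record(customerName, email, companySize=None):
    """Hypothetical handler for the tool defined earlier."""
    if "@" not in email:
        raise ValueError(f"'{email}' is not a valid email address")
    return f"Created record for {customerName}"


ok = call_tool(create_database_record, {"customerName": "Ada", "email": "ada@example.com"})
bad = call_tool(create_database_record, {"customerName": "Ada", "email": "nope"})
```

With a descriptive message in `bad`, the model can retry with a corrected email address or ask the user for one.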

Prompts

Prompts in MCP are predefined templates that standardize common AI interactions and workflows. They are user-controlled, meaning they are explicitly selected by users rather than automatically invoked by AI models. This makes them perfect for creating standardized workflows, onboarding experiences, and complex multi-step processes.

Dynamic Prompt Capabilities

Prompts are intended to make it easier to perform multiple steps in a workflow based on dynamic context from the user:

Embedded Resource Context: Prompts can dynamically include content from MCP resources, allowing them to reference current data, logs, or other configs

Multi-step Workflows: Complex business processes can be encoded as conversational flows

Parameterized Templates: Arguments allow customization while maintaining consistency

For example, a prompt may have the following argument schema:

```json
{
  "name": "incident-analysis",
  "description": "Analyze system incident with logs and metrics",
  "arguments": [
    {
      "name": "timeframe",
      "description": "Time period to analyze (e.g., '1h', '24h')",
      "required": true
    },
    {
      "name": "service",
      "description": "Service name to focus analysis on",
      "required": true
    }
  ]
}
```

When executed, the result of this prompt may be a list of messages from both the user and the assistant, similar to the typical messages argument passed into LLMs today.
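To illustrate what "executing" a prompt could look like on the server side, here is a small sketch that checks the required arguments and fills a template. The function name and the wording of the template are invented for this example; the message shape (a role plus a typed content object) follows my understanding of the protocol.

```python
def render_incident_analysis(arguments):
    """Turn prompt arguments into a list of chat messages (hypothetical)."""
    missing = [name for name in ("timeframe", "service") if not arguments.get(name)]
    if missing:
        raise ValueError("Missing required arguments: " + ", ".join(missing))
    text = (
        f"Analyze incidents for the {arguments['service']} service "
        f"over the last {arguments['timeframe']}. Summarize likely root causes."
    )
    return {
        "description": "Analyze system incident with logs and metrics",
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }


result = render_incident_analysis({"timeframe": "24h", "service": "checkout"})
print(result["messages"][0]["content"]["text"])
```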

UI Integration

Since prompts are selected by users rather than invoked by the AI model, they can be surfaced as slash commands in chat interfaces, quick actions in dashboards, context menu buttons in development tools, or guided workflows in customer onboarding. This flexibility allows you to integrate them naturally into existing user workflows, no matter how the host application is structured.

Resources

Resources provide the context foundation for AI interactions. They include data and content that AI models can reference to understand current state, historical information, and system configurations. Resources are application-controlled, meaning the client application (not the AI model) decides when and how they are used.

Resource Characteristics

Resources have a few key characteristics depending on the type of data, how it is surfaced, and whether it is static or dynamic content:

Type refers to the data type: either text, or binary data encoded as base64 (e.g., PDFs, images, audio, and video)

Access can either be direct through a list of concrete resources, or dynamic with a list of URI templates that are used to construct valid resource links at run time
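As a sketch of the dynamic case, the function below fills a URI template with values at run time. It handles only simple `{name}` placeholders, a small subset of what full URI templates allow, and the `logs://` scheme and variable names are made up for illustration.

```python
import re
from urllib.parse import quote


def expand_uri_template(template, variables):
    """Fill simple {name} placeholders, percent-encoding each value."""

    def substitute(match):
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"Missing template variable: {name}")
        # safe="" also encodes "/", so a value cannot add path segments.
        return quote(str(variables[name]), safe="")

    return re.sub(r"\{(\w+)\}", substitute, template)


uri = expand_uri_template(
    "logs://{service}/{date}",
    {"service": "checkout", "date": "2025-01-15"},
)
print(uri)  # logs://checkout/2025-01-15
```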

Implementation Recommendations

In order to keep content fresh, resources can have real-time updates through subscription mechanisms. Clients can subscribe to specific resources and receive notifications when content changes, enabling AI applications to stay synchronized with rapidly changing data.

When thinking about security, especially for enterprise data, keep the following points in mind:

Implement proper access controls and audit trails

Validate all URI patterns to prevent directory traversal attacks

Sanitize sensitive information before exposure
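For the directory traversal point, a common defensive pattern is to resolve the requested path and confirm it still sits under the directory you meant to expose. This is a minimal sketch of that check, with `/srv/docs` and the function name chosen purely for illustration.

```python
from pathlib import Path


def resolve_inside(base_dir, requested):
    """Resolve a requested path and refuse anything outside base_dir."""
    base = Path(base_dir).resolve()
    candidate = (base / requested).resolve()  # collapses ".." and symlinks
    if candidate != base and base not in candidate.parents:
        raise PermissionError(f"{requested!r} escapes {base}")
    return candidate


safe = resolve_inside("/srv/docs", "reports/q1.txt")
print(safe)
```

A request such as `../../etc/passwd` resolves to a location outside the base directory and is rejected before any file is opened.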

Roots

Roots define the operational boundaries where MCP servers should focus their attention. They provide a way for clients to inform servers about relevant resources and their locations, creating clear workspace boundaries.

Conceptually, roots are URIs that tell servers where they should operate:

```json
{
  "roots": [
    {
      "uri": "file:///workspace/frontend",
      "name": "Frontend Repository"
    },
    {
      "uri": "https://api.company.com/v1",
      "name": "Company API"
    }
  ]
}
```

Practical benefits include:

Clarity: Clear workspace boundaries prevent accidental access to unrelated data

Organization: Multiple roots enable simultaneous work with different systems

Performance: Servers can optimize for known resource locations

Servers should respect provided roots while understanding that they are informational rather than strictly enforced. This balance provides guidance while maintaining flexibility for edge cases and evolving requirements.
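A server that wants to honor roots could run a check like the one sketched here before touching a location. The `within_roots` helper is hypothetical. It compares the scheme, the host, and a path prefix, and it assumes the URIs are already normalized (no `..` segments).

```python
from urllib.parse import urlsplit


def within_roots(uri, roots):
    """Return True if uri falls under one of the client-provided roots."""
    target = urlsplit(uri)
    for root in roots:
        base = urlsplit(root["uri"])
        if (target.scheme, target.netloc) != (base.scheme, base.netloc):
            continue
        base_path = base.path.rstrip("/")
        # Match the root itself or anything below it, but not a sibling
        # that merely shares a prefix (e.g. /workspace/frontend-old).
        if target.path == base_path or target.path.startswith(base_path + "/"):
            return True
    return False


roots = [
    {"uri": "file:///workspace/frontend", "name": "Frontend Repository"},
    {"uri": "https://api.company.com/v1", "name": "Company API"},
]
print(within_roots("file:///workspace/frontend/src/app.ts", roots))
```

Since roots are advisory, a server might log or warn on a miss rather than fail outright, depending on how strict the deployment needs to be.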

Sampling

The final main feature to discuss is Sampling, which enables sophisticated agentic behaviors by allowing MCP servers to request LLM completions through the client instead of the other way around. The Sampling Flow follows a sequence like so:

Server requests completion with specific prompts and context

Client reviews and can modify the request

Client samples from the appropriate LLM

Client reviews and can modify the completion

Client returns the result to the server
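The five steps above can be sketched as a single client-side function. Everything here is a simplified stand-in: the request shape is trimmed down, `fake_llm` replaces a real model call, and the review callbacks represent whatever user-approval UI the host provides.

```python
def run_sampling(request, review_request, sample_llm, review_completion):
    """Client-side handling of one server-initiated sampling request."""
    # Step 2: the client (or its user) may edit or reject the request.
    approved_request = review_request(request)
    if approved_request is None:
        return None  # rejected: nothing is sent to the model
    # Step 3: the client, not the server, talks to the LLM.
    completion_text = sample_llm(approved_request)
    # Step 4: the completion is reviewed before it leaves the client.
    approved_text = review_completion(completion_text)
    if approved_text is None:
        return None
    # Step 5: the result goes back to the server.
    return {"role": "assistant", "content": {"type": "text", "text": approved_text}}


# Step 1: a request built by the server (simplified shape).
request = {
    "messages": [
        {"role": "user", "content": {"type": "text", "text": "Summarize: disk usage at 91%"}}
    ],
    "maxTokens": 50,
}


def cap_tokens(req):
    """Example review step: lower the token budget the server asked for."""
    return {**req, "maxTokens": min(req["maxTokens"], 20)}


def fake_llm(req):
    """Stand-in for a real model call."""
    return f"summary ({req['maxTokens']} tokens max)"


result = run_sampling(request, cap_tokens, fake_llm, lambda text: text.strip())
```

Because both review hooks sit inside the client, the server never sees the model directly and cannot bypass the user's edits.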

Security and control are built into this design. Users maintain complete control over what prompts are sent to AI models and what completions are returned to servers. This approach ensures that sampling enables powerful capabilities while preserving user agency and preventing unauthorized AI usage.

Agentic patterns enabled by sampling include reading and analyzing resources, making decisions based on context, generating structured data, handling multi-step tasks, and providing interactive assistance. These capabilities transform MCP servers from simple data providers into intelligent agents that can reason and act beyond the host and client application.

Note: Sampling is not yet supported across most current MCP host applications. This is because it is a complex feature to implement correctly, although I expect there will be use cases for it in more sophisticated applications in the future.

Ecosystem

Exploring Servers

The MCP ecosystem is rapidly growing with servers covering diverse domains from development tools to business applications. Understanding existing servers helps identify patterns and opportunities for your own implementations.

Existing servers can be found from a variety of sources, each with their own advantages/drawbacks:

The core Server Showcase by the MCP team offers a small window into the potential applications of MCP

Major Aggregator Websites like smithery.ai, mcp.so, mcp.run, and glama.ai all attempt to aggregate servers and clients from across the web, although it's too early to tell if the community will converge on any single aggregator

Sometimes you will also find servers through other means like web search or GitHub search, although these methods work best when you are looking for a particular server implementation

When exploring existing servers, pay attention to their architecture patterns, security implementations, and UX design. Servers are developed by the community and come with no guarantee of quality, so always do your own research before installing one.

Official Roadmap

The MCP team has outlined key priorities for the next six months that will significantly impact the ecosystem and development patterns.

Validation Infrastructure investments will include reference client implementations demonstrating protocol features and compliance test suites for automated verification. These tools will help developers implement MCP confidently while ensuring consistent behavior across different implementations.

MCP Registry Development aims to solve discovery and distribution challenges. The planned registry will function as an API layer that third-party marketplaces and discovery services can build upon, making it easier for users to find and install relevant MCP servers. It's unclear what the relationship will be between this registry and existing aggregator sites, whether it is a complete replacement or simply supplementary.

Agent Capabilities expansion includes Agent Graphs for complex agent topologies and Interactive Workflows with improved human-in-the-loop experiences. These capabilities will enable more sophisticated agentic behaviors while maintaining user control and oversight.

Multimodality Support will expand beyond text to include video and other media types, plus streaming support for multipart, chunked messages and bidirectional communication. This expansion opens up new possibilities for interactive AI experiences.

Governance Structures will prioritize community-led development and transparent standardization processes. This includes establishing clear contribution processes and exploring formal standardization through industry bodies.

Conclusion

The Model Context Protocol represents a fundamental shift toward modular, interoperable AI systems. For developers building agentic AI solutions, MCP offers an opportunity to create deep integrations that work across the entire ecosystem rather than being locked to specific platforms.

Key Takeaways for your development strategy:

Start Simple, Think Modular: Begin with focused MCP servers that solve specific integration challenges. Design them to be composable with other servers and extensible as requirements evolve.

Security and Trust: Enterprise customers require robust security implementations. Invest in proper authentication, authorization, audit trails, and data protection from the beginning rather than retrofitting security later.

User Experience Focus: The best MCP servers provide seamless integration into existing workflows. Design for your users' current processes rather than forcing them to adapt to new patterns.

Community Participation: The MCP ecosystem grows stronger with active community participation. Share your servers, contribute to discussions, and collaborate with other developers to establish best practices.

Future-Ready Architecture: Design your servers with the roadmap in mind. Plan for registry integration, enhanced agentic capabilities, and multimodal support even if you don't implement these features immediately.

Ecosystem Opportunity: Early MCP adoption positions you to benefit from the ecosystem's growth. Well-designed servers that solve real problems will gain adoption as the ecosystem matures and tooling improves. If you are developing a host application or client, your application will benefit from the growing list of capabilities built by server developers throughout the web.

Overall, the development of MCP has created unprecedented opportunities for developers who understand how to apply the standard. It provides a concrete foundation for sophisticated AI experiences that are both accessible and scalable. Whether you're building internal tools for your own AI applications or creating servers for the broader ecosystem, MCP enables you to focus on solving real business problems rather than wrestling with integration complexity. The protocol handles the plumbing so you can focus on delivering value.

As the ecosystem evolves with registries, enhanced tooling, and broader platform support, early investments in MCP development will compound. The servers you build today will benefit from tomorrow's infrastructure improvements, and the integration patterns you establish will influence how AI systems interact with business applications for years to come.

Learn More

If you would like to learn more about MCP and prepare for development, check out the following resources (in no particular order):

https://modelcontextprotocol.io: The official reference documentation for Model Context Protocol

https://github.com/modelcontextprotocol: Main GitHub project page for associated codebases and examples

https://simplescraper.io/blog/how-to-mcp: An expansive guide to server development with concrete technical code samples you can directly copy

https://github.com/modelcontextprotocol/typescript-sdk: The reference SDK for TypeScript-based servers and clients, with clear feature demonstrations and code samples in the README

https://github.com/modelcontextprotocol/python-sdk: Same as the TypeScript SDK, but for Python (they also have SDKs for Java, Kotlin and C#)

https://www.jsonrpc.org/specification: An overview of the JSON-RPC 2.0 specification, for those who are unfamiliar with it.